A lot has been written about the problems of working with Google App Engine for example here, here and here. The majority of the complaints are from people who used the platform experimentally for a few days, were frustrated by its intricacies and deviation from the typical LAMP and RDBMS paradigms. They wrote angry blogs and went back to their old platforms. The problem is that these complaints are easy to dismiss and hide some real issues with Google App Engine. We on the other hand did our due diligence and then some. We launched a back-end service for the Simpsons Tapped Out to production and have over a hundred thousand concurrent users. We have used pretty much every service GAE has to offer from Data Store to Big Query to the Identity API. We have compiled some of the major pitfalls in this article to help you make an educated decision as to whether Google App Engine is right for you and what you need to look out for.

Hidden Arbitrary Quota Limits

After a few months of hard work we soft-launched our application in a few countries and traffic gradually picked up. No surprises, we all patted ourselves on the back and did our global launch. The service performed as expected and we were happily chugging along. Part of that chugging was using the GAE App Identity service to sign authorization tokens. One fine day we found out that all our logins were failing, apparently there is a quota limit of 1.84 millions requests per day to the App Identity service. After which google “helps you control your costs” by refusing all your requests to the API for the day. Let me step back and let that sink in. We have a paid account with google on the highest tier of service and fully expected millions of requests per day and are willing to pay for them. Google decided that we should save money by denying all requests for the remainder of the day. Now just to clarify its not that we weren’t watching our quota closely enough, this quota of calls to the Identify API is not shown on the app stats page (or any page for that matter). None of the documentation mentions that there is a hard limit of 1.84 million requests to the API, and at no point over the past days when our call count was in the many hundreds of thousands of requests did someone at google decide to alert us that our app would suddenly stop working soon.

Hidden Arbitrary Library behaviour

While we are on the subject of Auth tokens, we noticed another strange issue while building the token verification service. We send our Auth token as a header with all requests so that various services can check user authenticity and authorization. The fact that this token is in the header makes it easy for us to use a servlet filter and check authorization before any business logic code is hit. What could be simpler right? Well what if every so often the header disappeared, no warning no error the header just magically disappeared. We realized that if any Http Header happens to be longer than a certain length (approximately 400 bytes) the google http client will silently drop the header and issue the http request with out it. Now we understand some proxies mangle headers and browsers don’t like big headers so google may want to dissuade us from using big headers. 400 bytes seems somewhat low as a hard limit but lets say we agree that it is reasonable. How would it ever be reasonable to silently drop the header on the client side and send the request anyway. Perhaps a warning in the client log that the header was too large and dropped would be useful. If the programmer explicitly set a header its a fair bet the server needs the information in that header. Why issue the request anyway without the header? Wouldn’t it have been more clear just to throw an exception and tell the client developer that big headers are not allowed? That would be too easy, instead we had to spend days of trial and error to figure out exactly why the requests were getting mangled.

No pre-scaling mechanism

As any one who has launched a large scale service knows, deployments to production are tricky. Deployments under load are more so. That is why most deployments happen in off-peak hours. However for us even our deepest trough has a few tens of thousand active users and we want to do hot deploys. With EC2 we would bring up a copy of the prod environment and point the load balancer at the new environment while keeping the old one running for connected clients. Technically it should have been even simpler with GAE, you deploy a new version of your server and make the new version default. The problem is that GAE is really bad at scaling rapidly for load. It takes some time for the GAE scheduler to detect that response times are rising and instances need to be launched. During this time your clients will get time out errors and very high response times. If the scheduler cannot find an instance to serve your request it will launch another instance and send the request there. Unfortunately, instance launch can take up to 30s, which means this request will be at least 30s long. We can side step this problem by using resident instances, i.e. if we know we are going to be getting a lot of traffic we can pre-launch instances. This is all great, full marks for App engine but the one small detail that completely negates all of these features; you can only launch resident instances for the default version of the app. This means that when we are doing code pushes and we deploy to the new version of the app there is no way to pre-launch instances for the new version. When we make the new version default it will have exactly 1 instance. Even at minimum load our services needs about 30 instances. If we ever had to do a critical fix under load when our app is running at about 300 instances we would be have massive client disruption. We contacted google about this issue and the official suggestion was to run a load test against the prod environment with dummy users to trick the scheduler into running more instances and then quickly shut down the load test and flick the switch to send real users to the new version.

The scheduler loop of death

A related problem to the one mentioned above, if your instance startup time exceeds 30s then the scheduler may not be able to bring up an instance cleanly. What does the scheduler do in such a scenario? It starts more instances. What if they don’t start in time? It launches a whole bunch more. We asked our Google support contact what to do and the answer was to use the warmup request to warm up your code and JVM. This worked like a charm. The response times started coming down and then one day while our service was happily chugging along, the scheduler decided to launch a few instances but the startup time was over 30s. This caused the launch to fail, the scheduler decided to launch more instances which also failed as the startup system was already overloaded and this cycle repeated it self until we had many thousands of instances running but only a few serving the requests. In fact since we had a request to memcached in the warmup the massive number of instances overloaded the memcached service and even the running instances were not able to serve traffic. Before you say Aha! why did you have memcached requests in the startup we would like to point out warming up cache is a very common startup use case. and we had fewer than ten calls to memcached in startup. Since then we have optimized our start up times but we still see delays in startup when we try to launch a few hundred instances at once. Unfortunately, once every deployment we need to push a new version, which necessities a new array. Under our load we need about 300 instances to handle running load. As we mentioned earlier there is no easy way of using resident instances to bring up these instances ahead of time.

Under-powered application front-end instances

The GAE instances are greatly under-powered. We had a simple use case where we used RSA cryptography to sign our authorization tokens. We accept that it is a CPU intensive task and we are willing to take a performance hit. However, a quick benchmark showed that the signature generation takes on average 10ms on a Ubuntu VM on my rather modest laptop. The same code on Google App Engine under no load takes anywhere from 500 to 600ms. This time does not include network latency or any scheduler overhead, this is just the time taken to compute the signature.

A small benchmark for computing an RSA signature:

A VM on my laptop 10ms

GAE F1 (600MHz, 128MB) 500-600ms

GAE F2 (1200MHz, 256MB) 450-500ms

GAE F4 (2400MHz, 512MB) 350-450msIf you scour Google Groups discussions, you will find that the recommended approach for doing something like this is to offload this work to an external service with more computational resources and then make an HTTP call. In fact GAE’s APP Identity API offers exactly this. However, the API has an arbitrary daily hidden quota of 1.84 Million calls as we mention above and there is no way of increasing it at the moment.

Under-performing Google Data Store and under-provisioned Cache

It would be fair to argue that most web applications are not CPU intensive and spend a significant amount of time waiting on cache and the backend data store. So maybe google skimped on CPU to beef up the Data Store and Cache services. But alas that is not so, indexed reads on the data store take anywhere from 150ms to 250ms under modest load and writes range from 300ms to over 500ms on occasions. We have seen these latencies when dealing with approximately 1500 requests per second sent to one of our services by Simpsons Tapped Out.

These latencies mandate that everything that can be cached must be cached. Fortunately, Google Cache is reasonably fast and the latencies range from as low as 10ms to a typical of 20-40ms. Here’s the kicker: the cache is automatically scaled out/back by Google a number of times in a day for a service under load. What this essentially does is that it invalidates a good chunk of the cached data, generally resulting in response time spikes and leads to service instability. We can’t think of any reason why the cache is being provisioned for exact fit. Cache provides a layer of stability for the service and serves as the only safeguard for protecting the less elastic backend data store. In addition the cache is a shared resource between multiple applications, hence additional load on some completely unrelated application could not only cause response time spikes on your service but also cause re-scaling. While this is nominally true for all cloud based infrastructure with GAE we see this several times a day and the spikes can significant.

User Interface for Androids

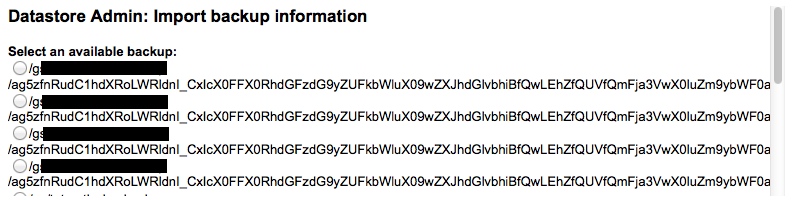

Another thing I would like to highlight is the image above, for me it symbolizes what is wrong with GAE. Its an actual screen shot from a menu in GAE console for restoring backups. These backups were generated through google’s standard tool and this is the User interface that allows you to select which one to restore from. The fact that some google developer somewhere wrote this UI looked at it and said “Sure, looks good you can select the backup you want lets ship it” scares me. It shows either an utter disregard for the usability of the server or a complete disconnect between team members when implementing related functions. Neither of these cases give me confidence that whats “under the hood” of google app engine has been thought through very well. This is important because you as a service developer are relying on GAE to have good plumbing under the hood as you have no control over it. If I had to write our service again I would probably go with Amazon Elastic Beanstalk or something else. For now it all kind of works and we are too far down this path so we will keep our fingers crossed.